July 24, 2025

For the past 12 months, we have been building a commercial-grade on-device AI SDK to give you superpowers:

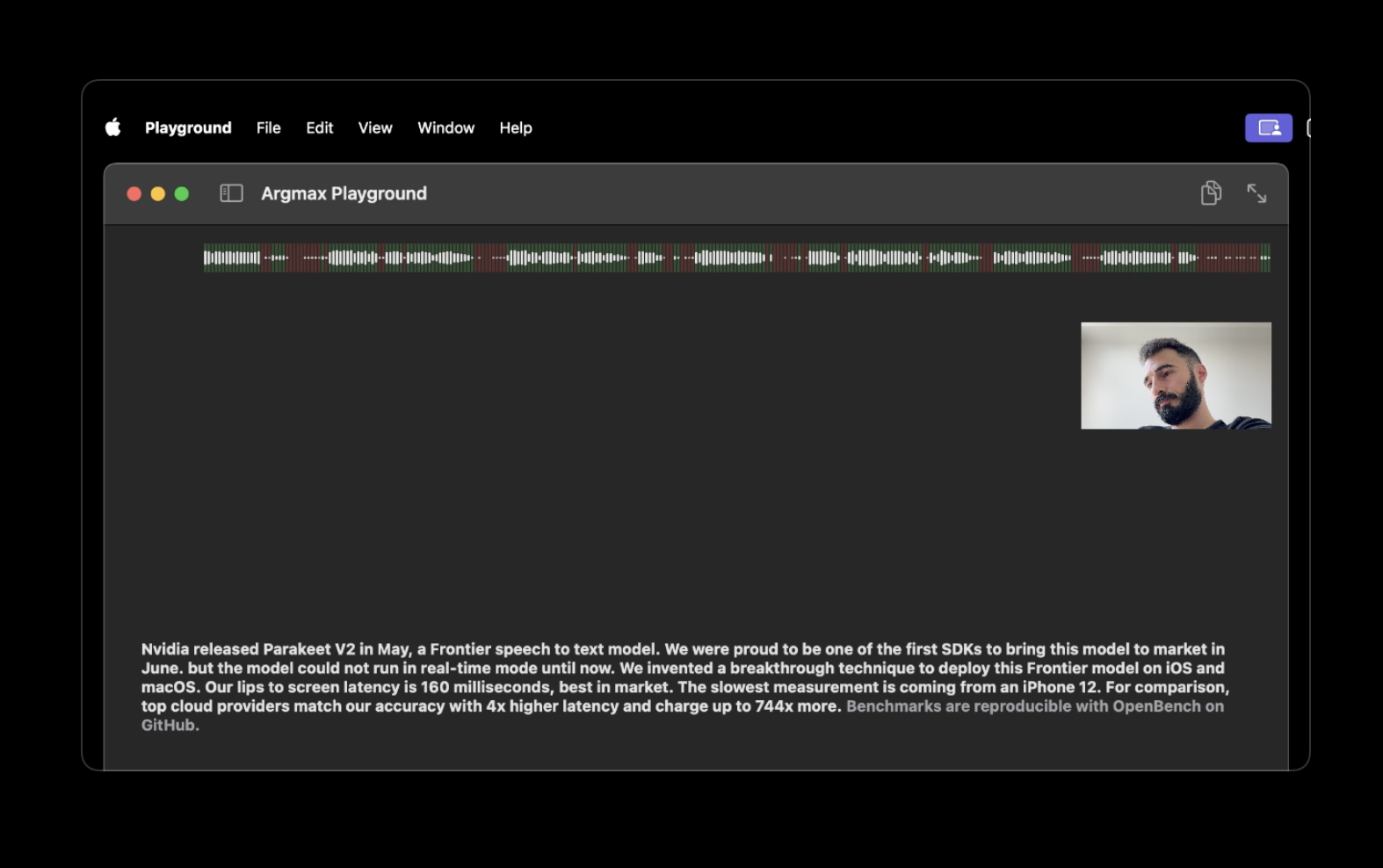

NVIDIA released Parakeet v2, a frontier speech-to-text model, in May. We were proud to be one of the first SDKs to bring this model to market in June, powering a few select apps that millions of people enjoy daily, but the model could not run in real-time mode... until now!

We invented a breakthrough technique to deploy this frontier model in real-time mode on macOS and iOS. Our lips-to-screen latency is 160 ms, the best in the market. The slowest measurement comes from an iPhone 12! For comparison, top cloud providers match our accuracy with 4x higher latency and charge up to 744x more. Benchmarks are reproducible with OpenBench on GitHub.

Our cloud API competitors charge $0.46 per hour of real-time transcription, and their service is subject to rate limits, concurrency limits, and downtime.

Argmax Pro SDK costs $0.42 per device per month for up to 744 hours of transcription (continuous use over a 31-day month), and it scales without any limits. Our customers build AI meeting notes, medical scribe, video editing, and voice agent applications on our SDK. These apps process dozens of hours of audio per end-user device every month!
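To make the pricing gap concrete, here is a small Python sketch that compares one device's monthly bill under the two pricing models quoted above. It assumes the cloud bill scales linearly at $0.46 per hour with no volume discounts or minimums; the constant and function names are illustrative only.

```python
# Prices quoted in this post (USD). Assumes linear cloud billing, no discounts.
CLOUD_PRICE_PER_HOUR = 0.46      # cloud API, per hour of real-time transcription
DEVICE_PRICE_PER_MONTH = 0.42    # Argmax Pro SDK, flat fee per device per month
MAX_HOURS_PER_MONTH = 31 * 24    # 744 hours: every hour of a 31-day month


def monthly_costs(hours: float) -> tuple[float, float]:
    """Return (cloud_cost, device_cost) for one device transcribing `hours` per month."""
    if not 0 <= hours <= MAX_HOURS_PER_MONTH:
        raise ValueError(f"hours must be between 0 and {MAX_HOURS_PER_MONTH}")
    cloud = hours * CLOUD_PRICE_PER_HOUR
    device = DEVICE_PRICE_PER_MONTH  # flat fee, independent of usage
    return cloud, device


def break_even_hours() -> float:
    """Monthly usage above which the flat per-device fee is the cheaper option."""
    return DEVICE_PRICE_PER_MONTH / CLOUD_PRICE_PER_HOUR


# A few usage levels, including "dozens of hours" and continuous use.
for hours in (1, 24, 48, MAX_HOURS_PER_MONTH):
    cloud, device = monthly_costs(hours)
    print(f"{hours:>4} h/month: cloud ${cloud:8.2f} vs. on-device ${device:.2f}")
print(f"break-even: {break_even_hours():.2f} h/month")
```

Under these assumptions, the flat fee becomes the cheaper option once a device transcribes a little under one hour of audio per month, and the gap widens linearly with every additional hour.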

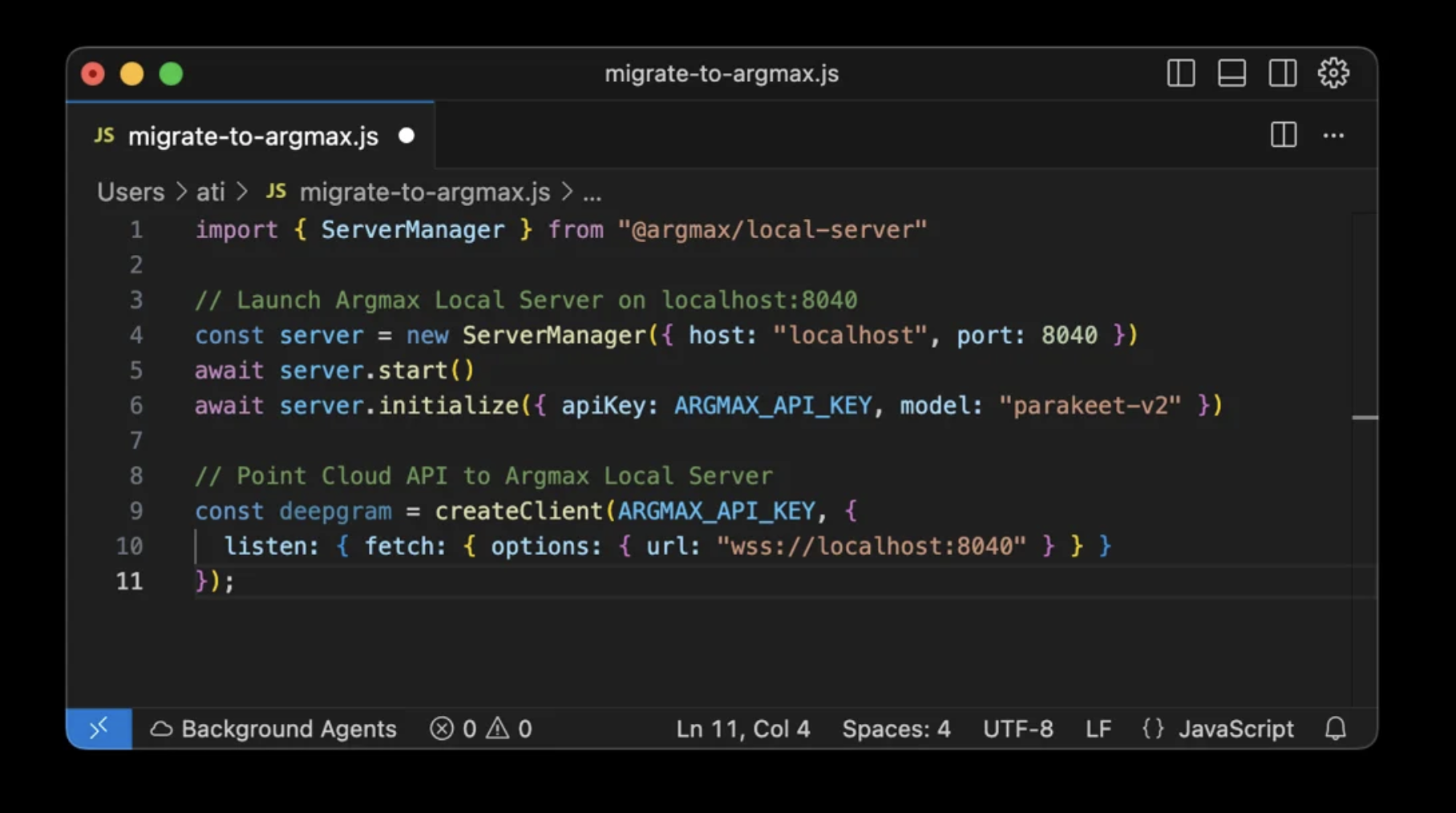

Our SDK is unapologetically platform-native, but we also love our customers with non-native (Electron, Python) apps, and we want to serve them as first-class citizens. We heard about your hurdles integrating our native SDK, and we are thrilled to launch the Argmax Local Server!

Argmax Local Server has the same capabilities as Argmax Pro SDK, but it is built to work without SDK integration. It is especially useful for apps that already use cloud providers like Deepgram, because the only changes required to migrate are launching the local server with our Node (or Python) package and changing the host URL to localhost!
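For an app that already streams audio to Deepgram, the host-URL swap comes down to making the host configurable. The Python sketch below assumes the Local Server accepts the same `/v1/listen` WebSocket path and query parameters as Deepgram's hosted API and listens on `ws://localhost:8080`. Both the port and the `STT_HOST` environment variable are hypothetical placeholders, so check the Argmax Local Server documentation for the real values.

```python
import os
from urllib.parse import urlencode

# Deepgram's hosted streaming endpoint (the cloud baseline).
DEEPGRAM_HOST = "wss://api.deepgram.com"
# Hypothetical: the actual port depends on how the Argmax Local Server is launched.
LOCAL_HOST = "ws://localhost:8080"


def transcription_host() -> str:
    """Read the host from a hypothetical STT_HOST env var, defaulting to the cloud."""
    return os.environ.get("STT_HOST", DEEPGRAM_HOST)


def listen_url(host: str, **params: str) -> str:
    """Build a streaming URL; assumes the local server mirrors Deepgram's /v1/listen path."""
    query = f"?{urlencode(params)}" if params else ""
    return f"{host.rstrip('/')}/v1/listen{query}"


# Same request options either way; only the host changes.
options = {"encoding": "linear16", "sample_rate": "16000"}
print(listen_url(DEEPGRAM_HOST, **options))
print(listen_url(LOCAL_HOST, **options))
```

Keeping the host in configuration rather than in code lets the same build point at either endpoint, for example falling back to the cloud when the local server is not running.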

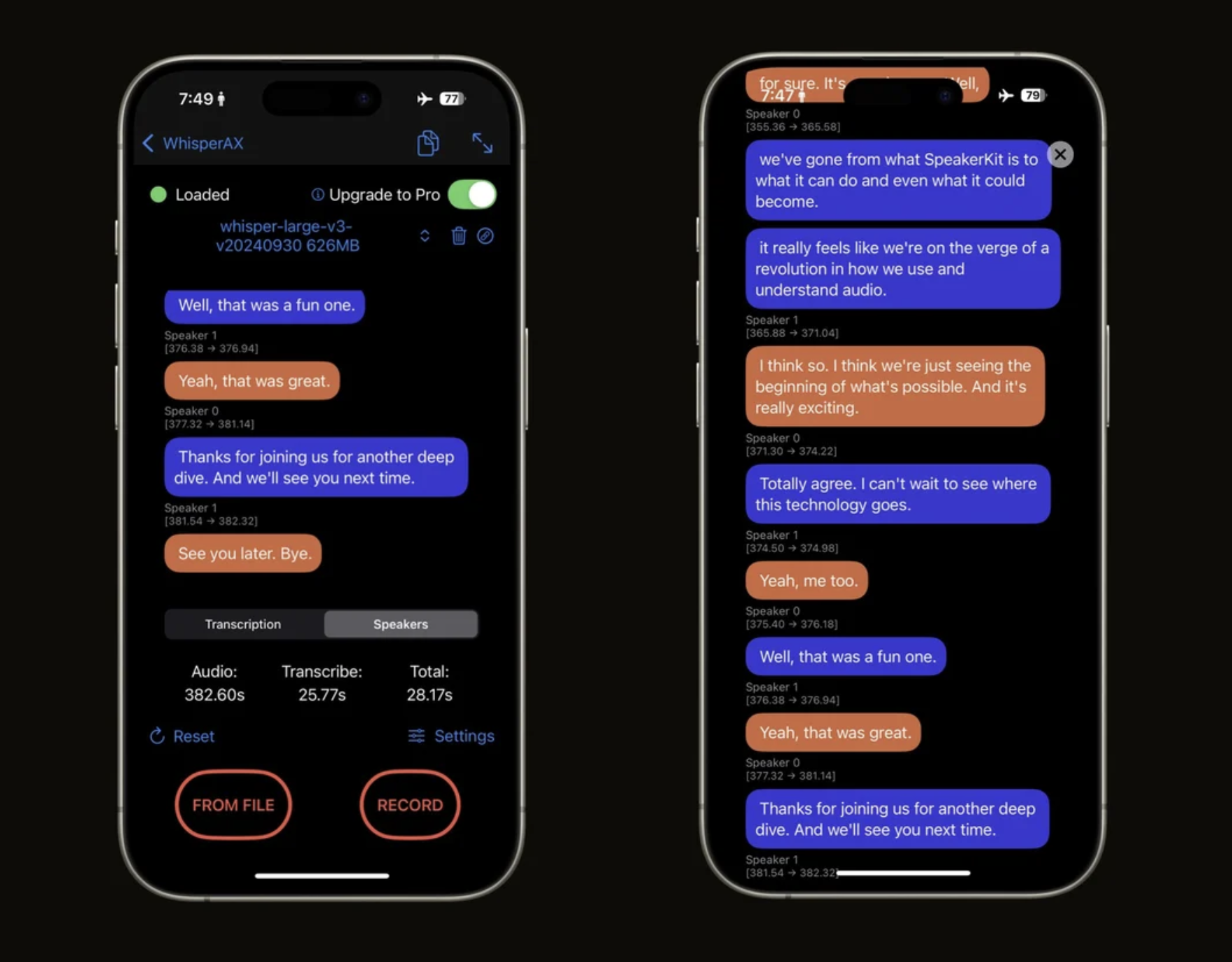

The Argmax Pro SDK implementation of the pyannote 3.1 speaker diarization system is 9.6x faster than the PyTorch implementation. Our benchmarks are reproducible via OpenBench, and our results are published in our Interspeech 2025 paper.

What's more, through our partnership with pyannoteAI, we are proud to be the first to bring its flagship commercial model on-device. This model delivers best-in-market accuracy, ahead of all the cloud APIs we benchmarked in our paper.

Argmax SDK is generally available on iOS and macOS today and is coming to Android in Q1 2026, building on the powerful Google LiteRT!

You can get started with Argmax Pro SDK today by signing up for a 14-day trial on our Platform!