August 8, 2025

Stream multiple audio inputs and get a separate real-time transcript stream for each, without additional memory consumption or slowdown. Multi-stream is required for AI Meeting Notes apps that concurrently transcribe both the system audio, e.g. Google Meet audio from remote participants, and the system microphone, e.g. the voice of the local participant in that Google Meet call.
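Keeping the sources in separate streams also gives a coarse speaker split for free: system audio carries the remote participants, and microphone audio carries the local participant. The Python below is a minimal sketch of how an app could label and interleave the two transcripts into one meeting transcript. It assumes each stream delivers finalized segments, already sorted by start time on a shared meeting clock; the `Segment` type and `merge_streams` helper are hypothetical and not part of any Argmax or Deepgram API.

```python
import heapq
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Segment:
    """Hypothetical finalized transcript segment; field names are illustrative."""
    start: float  # seconds since the meeting started (assumed shared clock)
    end: float
    text: str


def merge_streams(system: Iterable[Segment], mic: Iterable[Segment]) -> List[str]:
    """Interleave two per-source transcript streams by start time.

    Each input must already be sorted by `start`. System audio is labeled
    "Remote" (other participants) and microphone audio "Local".
    """
    remote = ((seg.start, "Remote", seg.text) for seg in system)
    local = ((seg.start, "Local", seg.text) for seg in mic)
    # heapq.merge lazily combines already-sorted inputs; on equal start times,
    # items from the first iterable (system audio) come first.
    merged = heapq.merge(remote, local, key=lambda item: item[0])
    return [f"[{start:.2f}s] {label}: {text}" for start, label, text in merged]


if __name__ == "__main__":
    system_audio = [Segment(0.0, 1.5, "Can everyone hear me?"),
                    Segment(3.0, 4.0, "Great, let's start.")]
    microphone = [Segment(1.8, 2.6, "Yes, loud and clear.")]
    for line in merge_streams(system_audio, microphone):
        print(line)
```

Because `heapq.merge` works lazily on sorted inputs, the same approach would extend to live streams, as long as each source only emits segments in start-time order.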

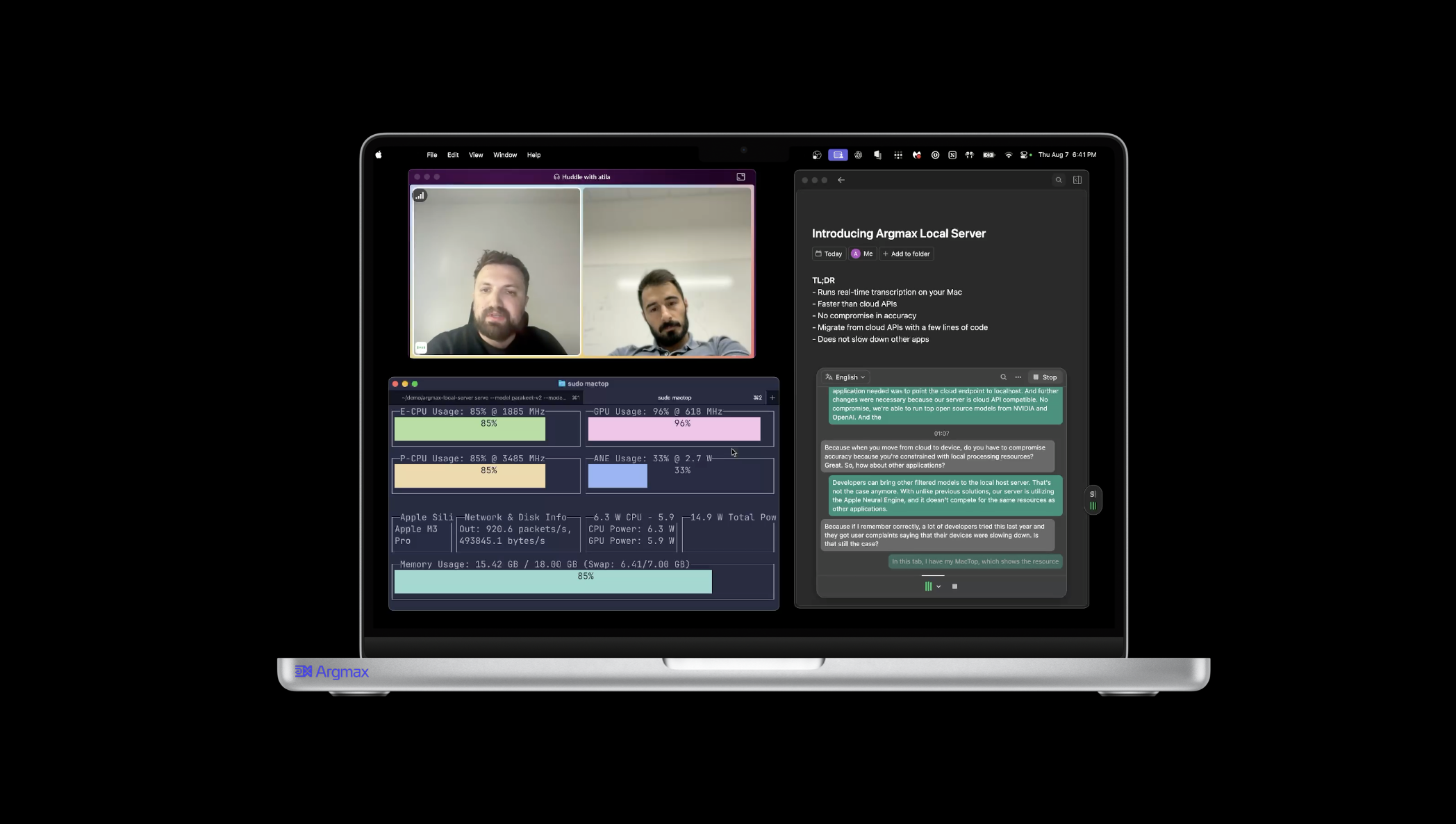

Argmax Local Server's WebSocket API is compatible with Deepgram's Streaming Speech-to-Text API. The demo video above shows a popular Electron app using Argmax Local Server with ZERO changes to app code when migrating from Deepgram. This works by simply switching the inference host URL from the remote host (api.deepgram.com) to the local host (localhost).
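As a minimal sketch of what switching the host URL means, the Python below builds a Deepgram-style streaming URL for either host. It assumes the local server mirrors Deepgram's `/v1/listen` path and query parameters (`encoding`, `sample_rate`, `channels`), which API compatibility implies but which is not confirmed here. The port `8080` and the use of unencrypted `ws://` on localhost are hypothetical placeholders, and `listen_url` is an illustrative helper.

```python
from urllib.parse import urlencode

# Deepgram's hosted streaming endpoint host.
DEEPGRAM_HOST = "api.deepgram.com"
# HYPOTHETICAL local address: the real port depends on how Argmax Local Server
# is configured; 8080 is only a placeholder.
LOCAL_HOST = "localhost:8080"


def listen_url(host: str, secure: bool, **params: object) -> str:
    """Build a Deepgram-style streaming URL (path /v1/listen) for `host`.

    `secure` selects wss:// (TLS) versus ws://; plain ws:// for localhost is
    an assumption, not documented Argmax behavior.
    """
    scheme = "wss" if secure else "ws"
    return f"{scheme}://{host}/v1/listen?{urlencode(params)}"


# Identical query parameters for both hosts; only the host (and scheme) changes.
stream_params = {"encoding": "linear16", "sample_rate": 16000, "channels": 1}
remote_url = listen_url(DEEPGRAM_HOST, True, **stream_params)
local_url = listen_url(LOCAL_HOST, False, **stream_params)
# remote_url == "wss://api.deepgram.com/v1/listen?encoding=linear16&sample_rate=16000&channels=1"
# local_url  == "ws://localhost:8080/v1/listen?encoding=linear16&sample_rate=16000&channels=1"
```

Under this assumption, everything downstream, such as sending audio frames over the socket and parsing transcript messages, would stay as it is. For multi-stream transcription, an app would open one connection per audio source, for example one for system audio and one for the microphone.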

If your user experience requires speaker separation beyond system audio versus microphone, Argmax SDK SpeakerKit implements leading open-source speaker diarization systems such as pyannote-4.0 to separate and identify each meeting attendee. SpeakerKit is also the exclusive on-device provider of the best-in-market speaker diarization system: pyannoteAI Precision-2.

SpeakerKit supports speaker diarization of prerecorded audio, which means that speaker diarization can be run at the end of each meeting. Real-time speaker diarization will be added to Argmax Local Server (and Enterprise SDK) in early 2026. Get on the waitlist to be the first to hear about it and onboard when it ships!

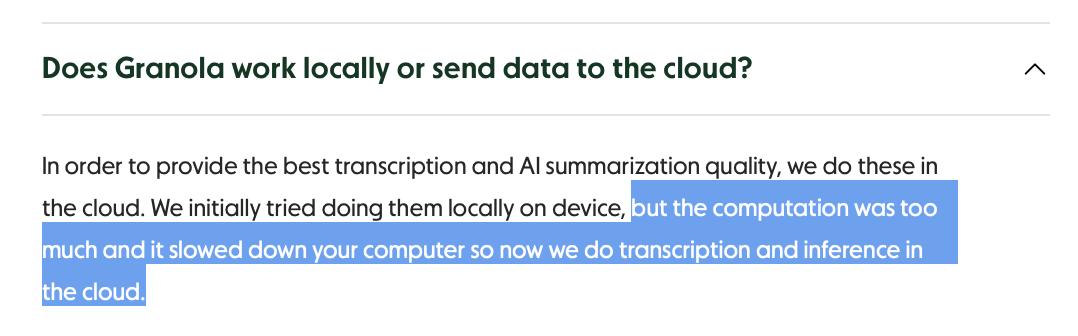

Before Argmax Local Server hit the market, many apps, such as Granola, tried on-device inference and decided against it because contention with other apps for CPU and GPU resources led to slowdowns and user complaints.

As part of our mission to make on-device the obvious architectural choice for audio model inference infrastructure, we solved this problem. See 1:31 in the video above for details on how.

To learn more about Argmax Local Server, request an Argmax Enterprise demo today!